Are power laws good for anything?

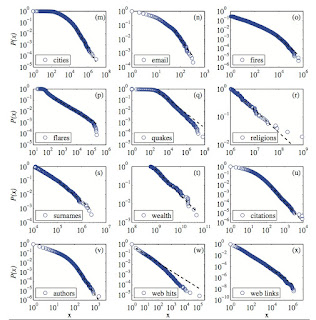

It is rather amazing that many complex systems, ranging from proteins to stock markets to cities, exhibit power laws, sometimes over many decades (orders of magnitude). A critical review is here, which contains the figure below. Complexity theory makes much of these power laws. But sometimes I wonder what the power laws really tell us, and particularly whether they are good for anything when it comes to social and economic issues.

Recently, I learnt of a fascinating case. Admittedly, it does not rely on the exact mathematical details (e.g. the value of the power law exponent!). The case is described in an article by Dudley Herschbach, "Understanding the outstanding: Zipf's law and positive deviance", and in the book Aid at the Edge of Chaos, by Ben Ramalingam.

Here is the basic idea. Suppose that you have a system of many weakly interacting (random) components. Based on the central limit theorem, one would expect a random variable that sums over these components to obey a normal (Gaussian) distribution. This means