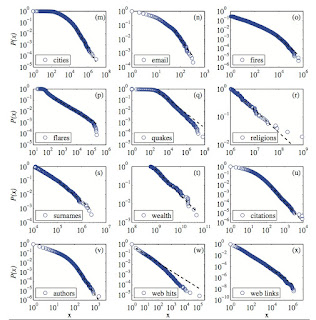

A critical review is here, which contains the figure below.

Complexity theory makes much of these power laws.

But sometimes I wonder what power laws really tell us, and in particular whether they are good for anything when it comes to social and economic issues.

Recently, I learnt of a fascinating case. Admittedly, it does not depend on the exact mathematical details (e.g., the value of the power-law exponent!).

The case is described in an article by Dudley Herschbach,

*Understanding the outstanding: Zipf's law and positive deviance*

and in the book *Aid at the Edge of Chaos*, by Ben Ramalingam.

Here is the basic idea. Suppose you have a system of many weakly interacting (random) components. If a variable of interest is effectively a sum of many small, nearly independent contributions, the central limit theorem suggests that it will follow a normal (Gaussian) distribution. Large deviations from the mean are then extremely unlikely, because the probability of a deviation falls off faster than exponentially with its size. Now suppose instead that the system is "complex" and its components interact strongly. Then the probability distribution of the variable may follow a power law. In that case, large deviations from the mean can be orders of magnitude more probable than they would be if the distribution were normal.
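To put a rough number on "orders of magnitude", here is a minimal Python sketch. It assumes a Pareto distribution as the stand-in power law, with exponent `alpha = 3` and minimum value `x_m = 1`; both are arbitrary illustrative choices, not values taken from the sources above. For each `k` it compares the probability of landing more than `k` standard deviations above the mean under that Pareto distribution with the same probability under a normal distribution.

```python
import math


def normal_tail(k: float) -> float:
    """P(X > mean + k*sd) for any normal distribution (depends only on k)."""
    return 0.5 * math.erfc(k / math.sqrt(2))


def pareto_tail(k: float, alpha: float = 3.0, x_m: float = 1.0) -> float:
    """P(X > mean + k*sd) for a Pareto (power-law) distribution.

    Illustrative assumption: Pareto with shape alpha and scale x_m, whose
    survival function is P(X > x) = (x_m / x)**alpha for x >= x_m.
    Needs alpha > 2 so that the mean and standard deviation are finite.
    """
    mean = alpha * x_m / (alpha - 1)
    sd = x_m * math.sqrt(alpha / ((alpha - 1) ** 2 * (alpha - 2)))
    x = mean + k * sd
    # Below x_m the whole distribution lies above x.
    return 1.0 if x < x_m else (x_m / x) ** alpha


for k in (2, 3, 5, 10):
    n, p = normal_tail(k), pareto_tail(k)
    print(f"k = {k:>2}: normal {n:.1e}, power law {p:.1e}, ratio {p / n:.1e}")
```

With these assumed parameters, a 5-standard-deviation excursion comes out roughly four orders of magnitude more probable under the power law than under the normal distribution, and the gap widens rapidly as `k` grows. The exact ratios depend on the exponent chosen.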

Now, let's make this concrete. Suppose one goes to a poor country and looks at the weight of young children. One will find that the average weight is significantly lower than in an affluent country and, most importantly, that it is below what is healthy for brain and physical development. These low weights arise from a complex range of factors related to poverty: limited money to buy food, lack of diversity in the diet, ignorance about healthy diet and nutrition, famines, giving more food to working members of the family, ...

However, if children's weights obey a power-law distribution rather than a normal one, one might hope to find some children who have a healthy weight and investigate what factors contribute to it. This leads to the following.
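The hope of "finding some children who have a healthy weight" can be illustrated with a toy simulation. The sketch below is purely hypothetical: weights are in arbitrary units, the "healthy" threshold is assumed to sit `k = 4` standard deviations above the local mean, and the power law is modelled as a Pareto distribution with exponent `alpha = 3` (real child weights are of course not exactly Pareto-distributed). Both models share the same mean and standard deviation, so only the shape of the tail differs, and the code counts how many simulated children would qualify as potential positive deviants under each.

```python
import math
import random


def count_above(samples, threshold):
    """Number of samples strictly above the threshold (the would-be 'positive deviants')."""
    return sum(1 for x in samples if x > threshold)


def simulate(n_children=100_000, k=4.0, alpha=3.0, seed=0):
    """Count draws exceeding mean + k*sd under a normal and a power-law model.

    Hypothetical toy model: both distributions have the mean and standard
    deviation of a Pareto distribution with x_m = 1 and shape alpha (> 2).
    """
    rng = random.Random(seed)
    mean = alpha / (alpha - 1)                                # Pareto mean for x_m = 1
    sd = math.sqrt(alpha / ((alpha - 1) ** 2 * (alpha - 2)))  # Pareto sd for x_m = 1
    threshold = mean + k * sd                                 # stand-in for a "healthy weight"

    normal = [rng.gauss(mean, sd) for _ in range(n_children)]
    power_law = [rng.paretovariate(alpha) for _ in range(n_children)]  # x_m = 1
    return count_above(normal, threshold), count_above(power_law, threshold)


if __name__ == "__main__":
    n_normal, n_power = simulate()
    print(f"normal model:    {n_normal} children above threshold")
    print(f"power-law model: {n_power} children above threshold")
```

With these toy settings the normal model typically yields only a handful of children above the threshold out of 100,000, while the power-law model yields hundreds. That difference is what would make a search for positive deviants worthwhile.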

Positive Deviance (PD) is based on the observation that in every community there are certain individuals or groups (the positive deviants), whose uncommon but successful behaviors or strategies enable them to find better solutions to a problem than their peers. These individuals or groups have access to exactly the same resources and face the same challenges and obstacles as their peers.

The PD approach is a strength-based, problem-solving approach for behavior and social change. The approach enables the community to discover existing solutions to complex problems within the community.

The PD approach thus differs from traditional "needs based" or problem-solving approaches in that it does not focus primarily on identification of needs and the external inputs necessary to meet those needs or solve problems. A unique process invites the community to identify and optimize existing, sustainable solutions from within the community, which speeds up innovation.

The PD approach has been used to address issues as diverse as childhood malnutrition, neo-natal mortality, girl trafficking, school drop-out, female genital cutting (FGC), hospital acquired infections (HAI) and HIV/AIDS.