I often read in physical chemistry papers statements along the lines of "a major puzzle is the extremely high mobility of protons and hydroxide ions in liquid water ... explaining this leads to consideration of non-diffusive transport mechanisms such as the Grotthuss mechanism."

Furthermore, a physics paper, "Ice: a strongly correlated system", cited by field theory enthusiasts [gauge theories, deconfinement, ...], states:

> ice exhibits a high static permittivity comparable with the one of liquid water, and electrical mobility that is large when compared to most ionic conductors. In fact, the mobility is comparable to the electronic conduction in metals.

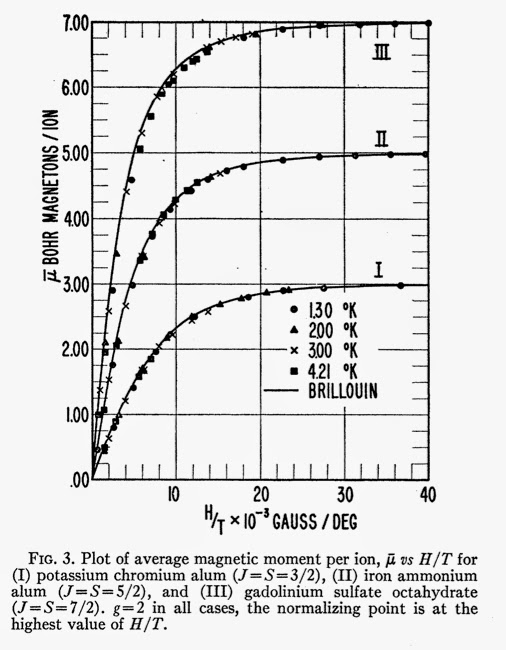

Thus, we see that the mobility of H+ and OH- (hydroxide) is about 3-7 times larger than that of other charged ions. This is hardly a gigantic effect!

Now, suppose the transport proceeds via diffusion. Then one can use the Stokes-Einstein relation to estimate the mobility of an ion in terms of the viscosity of the solvent (water). This means the mobility scales inversely with the "hydrodynamic radius" of the diffusing particle. Atkins shows that this leads to reasonable estimates of the mobility [for the ions in the bottom three lines of the table above] with "hydrodynamic radii" of about 2 Angstroms.
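To make the scaling concrete, here is a minimal sketch of the Stokes estimate, u = ze/(6πηa), for the drift mobility of a charged sphere. The viscosity value (about 0.89 mPa s for water at 25 degrees C) and the trial radii are my own assumed inputs, not numbers taken from Atkins' table, and the function name is just illustrative.

```python
import math

E_CHARGE = 1.602176634e-19   # elementary charge, C
ETA_WATER_25C = 0.89e-3      # assumed viscosity of water at 25 C, Pa s


def stokes_mobility(radius_m, viscosity=ETA_WATER_25C, charge_number=1):
    """Mobility u = z e / (6 pi eta a) of a charged sphere, in m^2/(V s)."""
    return charge_number * E_CHARGE / (6 * math.pi * viscosity * radius_m)


# Trial hydrodynamic radii in Angstroms (assumed values for illustration).
for radius_angstrom in (1.0, 2.0, 3.0):
    u = stokes_mobility(radius_angstrom * 1e-10)
    # Convert m^2/(V s) to cm^2/(V s) by multiplying by 1e4.
    print(f"a = {radius_angstrom:.1f} A -> u = {u * 1e4:.2e} cm^2/(V s)")
```

For a radius of 2 Angstroms this gives a mobility of roughly 5 x 10^-4 cm^2/(V s), and halving the radius doubles the mobility, which is the inverse scaling used in the argument.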

But H+ will be bound to H2O to form H3O+, which will be even larger than K+, so the mobility should be even smaller than for K+, not larger. OH- should be comparable.

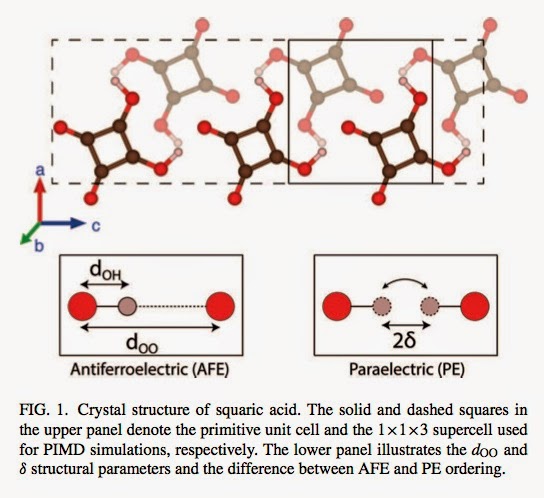

Furthermore, if transport were diffusive, the mobility of protons in ice should be dramatically less, since the solvent is no longer a fluid. However, the mobility at -5 degrees C is only a factor of six less than at 25 degrees C, as reported here.

Hence, it seems that proton transport cannot proceed by diffusion and it is necessary to consider alternative mechanisms such as the Grotthuss one.

Next, I should comment on the absolute magnitude of the mobility compared to a simple lower bound for coherent "hopping" transport, e a^2/hbar ~ 1 cm^2/(V sec), characteristic of energy bands. The values for H+ and OH- are about a factor of 30-50 smaller than this lower bound. Thus, water and ice are not even bad metals, and the claim in the physics paper that the mobility is comparable to electronic conduction in a metal is wrong.
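As a quick check on that scale, here is a short sketch that evaluates the bound read as e a^2/hbar, which is the combination of these constants with units of mobility. The hopping distances a of 1 and 3 Angstroms are my own assumed values, and the function name is illustrative.

```python
E_CHARGE = 1.602176634e-19   # elementary charge, C
HBAR = 1.054571817e-34       # reduced Planck constant, J s


def hopping_mobility_scale(a_m):
    """Mobility scale e a^2 / hbar in m^2/(V s) for hopping distance a."""
    return E_CHARGE * a_m ** 2 / HBAR


# Assumed hopping distances in Angstroms (not specified in the text above).
for a_angstrom in (1.0, 3.0):
    mu = hopping_mobility_scale(a_angstrom * 1e-10)
    # Convert m^2/(V s) to cm^2/(V s) by multiplying by 1e4.
    print(f"a = {a_angstrom:.1f} A -> e a^2/hbar = {mu * 1e4:.2f} cm^2/(V s)")
```

For a of a few Angstroms the result is of order 1 cm^2/(V s). Since the scale goes as a^2, the exact ratio to the measured ionic mobilities depends on the distance chosen.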